Local AI browsers offer an alternative for people wary of sharing personal data with large tech companies. These browsers run AI models directly on the device, so prompts and responses do not have to pass through a cloud service. The **Puma browser**, available on both **Android** and **iOS**, has drawn attention because it lets users chat with a local AI model without sending their data off the phone.

As generative AI spreads into everyday applications, privacy concerns grow with it. Many users are reluctant to have sensitive conversations or files processed on servers owned by companies like **OpenAI** or **Microsoft**. Local AI browsers give these users another option. Because the model runs on the device itself, the AI features keep working without an internet connection and do not depend on external servers.

Introducing Puma: A Privacy-Centric Solution

Puma also lets users pick alternative search engines such as **Ecosia** and **DuckDuckGo**, which helps them avoid the data collection practices of larger corporations. Out of the box, the browser ships with **Meta’s** open-source **Llama 3.2** model, so AI features that keep queries private are available immediately.

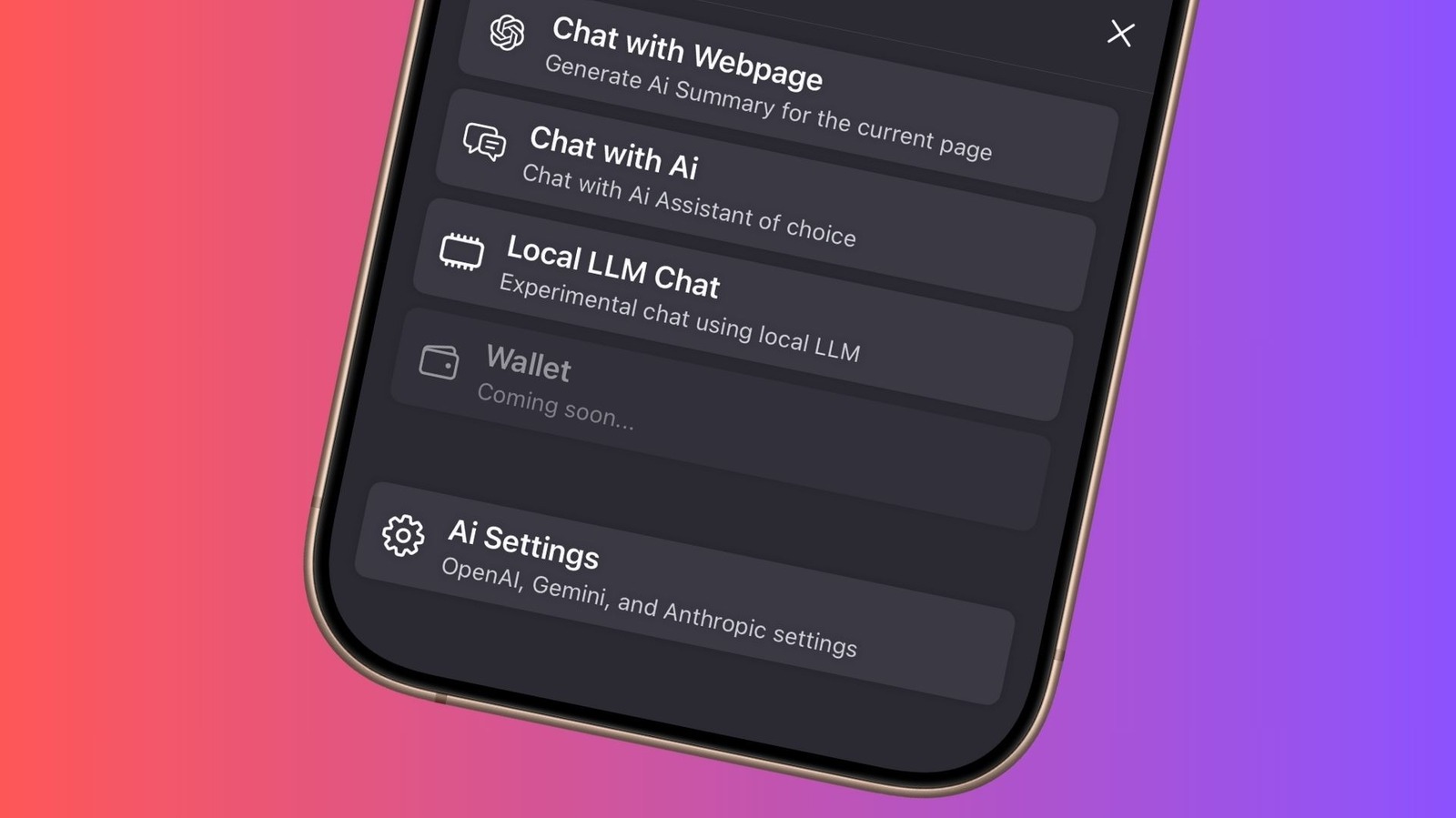

Adding more models is straightforward: open the Local LLM Chat option in the browser and pick from the list of available models, which includes **Mistral**, **Gemma**, and **Qwen**. Each model is typically larger than **1GB**, so the device needs enough free storage. Once a model is downloaded, it can handle a range of tasks without any data leaving the phone.

Why Choose Local AI Models?

Privacy is the main reason to choose local AI models over established platforms like **ChatGPT** or **Google’s** AI tools. Users keep control of their data while still getting AI assistance, and the models keep working offline. For example, when internet access is limited, a local model can still summarize text and answer general knowledge questions.

Local models have clear limits, however. The models available in Puma handle text only, so features common in cloud services, such as image generation, are not available. Puma also offers no setting for splitting the AI workload between the CPU and GPU, an option some competing apps provide.

Local AI browsers like Puma reflect a shift toward user privacy in a digital landscape dominated by large corporations. As more users worry about data security, demand for privacy-first tools is likely to grow. Running AI locally keeps personal information on the device and lets users engage with the technology on their own terms.